Is NVMe-oF the Future of Storage?

Over the past several years, there has been a lot of talk about NVMe, or Non-Volatile Memory Express. Despite its heightened popularity, adoption rates for NVMe have been low in most enterprise data center environments. At the same time, more and more vendors are supporting NVMe over Fabrics (NVMe-oF). But what does this mean for a typical enterprise data center?

NVMe vs SCSI

It’s important to first understand NVMe itself. When it first hit the market, many people assumed it was just a new, faster SSD. In reality, NVMe is a new storage protocol created from scratch with performance in mind. This allows us to take full advantage of the speed of modern SSDs and storage-class memory (SCM).

Previous generations of drive interfaces include serial-attached SCSI (SAS) and serial ATA (SATA). Operating systems communicate with these devices using the SAS and SATA protocols. SAS uses SCSI commands, which were formally adopted by the American National Standards Institute in 1986. SCSI is reliable but has become bloated over the years, with over 250 commands in the spec. There are commands for storage I/O, of course, but it also includes commands for printers, tape devices, and audio devices.

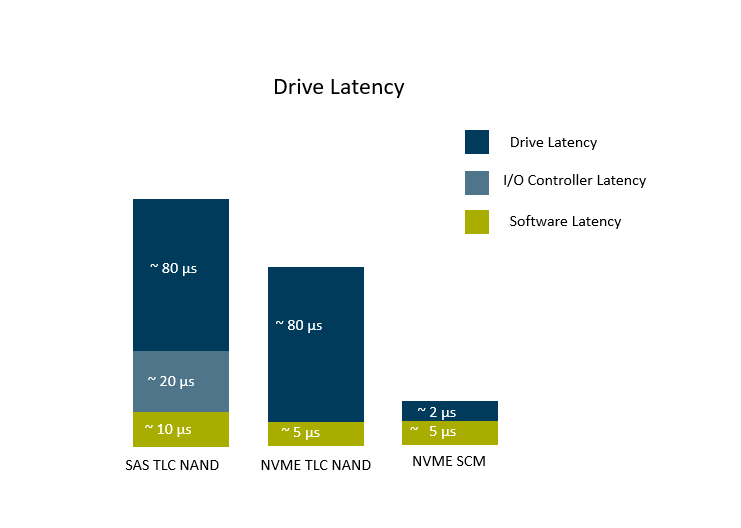

On the other hand, the NVMe spec has only 13 required commands, with only 3 dedicated to I/O. It also greatly increases the number of queues and the queue depth, allowing far more simultaneous operations than SCSI. As a result, switching to the NVMe protocol removes roughly 30% of the latency compared with SAS SSDs.
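To put the queueing difference in numbers, here is a minimal Python sketch that multiplies queue count by queue depth for each interface. The limits are commonly cited figures rather than values from this article, and the SAS depth in particular varies by device, so treat them as assumptions. The `QueueModel` class is a hypothetical helper for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    """Hypothetical helper: one storage interface's queueing limits."""
    name: str
    queues: int   # number of command queues
    depth: int    # commands per queue

    @property
    def max_outstanding(self) -> int:
        # Theoretical ceiling on commands in flight at once.
        return self.queues * self.depth


# Commonly cited limits (assumptions, not measured values).
MODELS = [
    QueueModel("SATA (AHCI)", queues=1, depth=32),
    QueueModel("SAS (SCSI)", queues=1, depth=254),
    QueueModel("NVMe", queues=65_535, depth=65_536),
]

for m in MODELS:
    print(f"{m.name:<12} {m.queues:>6} queue(s) x {m.depth:>6} deep "
          f"= {m.max_outstanding:,} outstanding commands")
```

Real devices and drivers usually configure far fewer queues than the NVMe maximum (often one per CPU core), so the point is the design headroom, not a number you would see in practice.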

NVMe over Fabrics

When NVMe devices were first released, they required connectivity directly to the PCIe bus of a server. This direct-attached storage (DAS) limited adoption to point solutions within the data center, and using them meant you lost the enterprise SAN features we have all become accustomed to, like storage snapshots and storage-based replication.

NVMe-oF solves this problem. It connects servers to remote NVMe devices over a storage fabric and allows them to communicate as if they were plugged directly into the PCIe bus. Now we can take advantage of the NVMe protocol using storage on an enterprise SAN and gain all the features back that were lost with DAS implementations. Originally, OS support was limited to a few Linux distributions. Now with the release of VMware ESXi 7.0, NVMe-oF-capable operating systems are a reality for most enterprise customers.

Types of Fabrics

With NVMe-oF, there are 3 options for the storage fabric. Each has its own pros and cons.

Fibre Channel

Customers with 32Gb Fibre Channel (FC) in their environment have the option of running the NVMe protocol over the same storage fabric as FC, allowing for an easy transition. This might be a good option for existing FC customers. However, a non-FC customer might not want an additional set of infrastructure to support storage.

RDMA

RDMA options include InfiniBand, RoCE, and iWARP. InfiniBand offers very low latency and is well suited to latency-sensitive applications, but it requires specialized hardware. RoCE runs over Ethernet, which is an advantage, but isn’t widely supported. All 3 options are niche solutions that may not be common in the typical enterprise data center.

TCP

In 2018, TCP was added as a new transport option. Because it is relatively new, it lacks major vendor support. But, once adopted, it will allow enterprises to run NVMe over the 25/40/100Gb Ethernet infrastructure being installed today.
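For a sense of what attaching to an NVMe/TCP target looks like on a Linux host, the sketch below builds (but does not run) an `nvme connect` command for the nvme-cli tool. The function name `build_connect_command`, the target address, and the subsystem NQN are all hypothetical placeholders. Port 4420 is the port registered for NVMe-oF, though your array may be configured differently.

```python
from typing import List

NVME_OF_DEFAULT_PORT = 4420  # registered NVMe-oF port; an array may use another


def build_connect_command(target_addr: str,
                          subsystem_nqn: str,
                          port: int = NVME_OF_DEFAULT_PORT) -> List[str]:
    """Return the argv for an nvme-cli connect over TCP (nothing is executed)."""
    # NVMe Qualified Names start with "nqn."; reject obvious mistakes early.
    if not subsystem_nqn.startswith("nqn."):
        raise ValueError(f"not an NQN: {subsystem_nqn!r}")
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={target_addr}",
        f"--trsvcid={port}",
        f"--nqn={subsystem_nqn}",
    ]


# Placeholder values: a documentation-range IP and an example NQN.
cmd = build_connect_command("192.0.2.10", "nqn.2014-08.com.example:array1")
print(" ".join(cmd))
```

Running the resulting command requires root privileges and the NVMe/TCP kernel module; once connected, the remote namespace shows up as an ordinary `/dev/nvme*` block device.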

Modernizing with NVMe-oF

While operating systems have been slow to adopt NVMe-oF, storage and storage fabric vendors have been ready. Keep NVMe-oF in mind as you modernize your data center or if you have an application that needs an extra performance boost. When evaluating technologies, remember that just having NVMe drives in your storage system won’t give you the full performance gain. You will need a storage fabric and operating systems that support the NVMe protocol end to end to see the full benefits.
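One way to check the host side of an end-to-end NVMe path on Linux is to see which transport each NVMe controller uses. The sketch below assumes the kernel exposes a `transport` attribute under `/sys/class/nvme/<controller>/`, with values such as `pcie`, `tcp`, `rdma`, or `fc`. The function name `controller_transports` is a hypothetical helper, and the sysfs root is a parameter so the code can be tried against a test directory.

```python
from pathlib import Path
from typing import Dict


def controller_transports(sysfs_root: str = "/sys/class/nvme") -> Dict[str, str]:
    """Map each NVMe controller name (e.g. 'nvme0') to its transport string."""
    root = Path(sysfs_root)
    transports: Dict[str, str] = {}
    if not root.is_dir():
        # No NVMe driver loaded, or not a Linux host: report nothing.
        return transports
    for ctrl in sorted(root.iterdir()):
        attr = ctrl / "transport"
        if attr.is_file():
            transports[ctrl.name] = attr.read_text().strip()
    return transports


if __name__ == "__main__":
    for name, transport in controller_transports().items():
        kind = "local PCIe" if transport == "pcie" else f"fabric ({transport})"
        print(f"{name}: {kind}")
```

A host that shows only SCSI devices for its SAN volumes is still translating to SCSI somewhere along the path, even if the array itself is built on NVMe drives.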