Understanding Azure Functions to Maintain High Availability

Azure Functions is a serverless compute platform that offers a highly scalable, event-driven experience for developers. Sometimes known as Function-as-a-Service, serverless architecture allows developers to run small pieces of code without having to think about the infrastructure on which it is executed. Azure Functions gets rid of the need to manage or provision servers, and lets developers work in their choice of coding language.

At ivision, we use Azure Functions to accelerate and simplify integrations between various solutions and customer environments. Functions offers a large improvement over previous on-premises solutions that did not scale well. Not only has it enabled us to ingest a significantly larger amount of tickets than previously possible, it offers endless customizations in alert delivery.

Consumption Plan for Azure Functions

Azure Functions has multiple options for pricing plans, including an App Service plan and a new Premium plan. The classic serverless option, though, is the Consumption plan. Consumption is exactly what it sounds like: you are billed purely based on your actual consumption. Functions are charged per actual executions, so the cost grows alongside the application demand.

Because Azure Functions is highly scalable, it can bring enough capacity to serve your largest demands – or it can scale down to zero when not in use. Previously, multiple applications would run together on a shared infrastructure, making it incredibly complicated to determine the cost of any one component. With the consumption plan, you are paying per actual use and can manage and understand the exact cost of each component.

Maintain High Availability with Azure Functions

There are a few things to consider when using Azure Functions on the consumption plan. While the serverless model allows you to not worry about the instance’s host or environment, it still does not entirely protect from hardware issues. For example, a region outage – while rare – would take all integrations offline until the issue is resolved by Microsoft.

If a region were irrecoverably damaged, you would lose things such as application settings and function keys that are only stored in the single region. The recovery time from such an event would be long beyond any acceptable outage timeframe.

Use a Secondary Region

One solution for this concern is to set up a secondary region with all the same production apps. With both regions behind the same traffic manager and a custom CNAME that points to the traffic manager, no external updates are required for routing to update. This does require that both regions be pointed to the same repository; however, the backend storage is different for the application itself. This means that the two regions operate completely independently.

Importantly, the Consumption plan differs from the always-on App Service plan. Consumption doesn’t give access to some of the backup options which could assist with the replication process. This means that if you want to stay on the Consumption plan, you need to copy the data yourselves.

Avoid Duplicate Functions from Timer Triggers

Our generic model for all integrations at ivision is having HTTP triggers that write to storage queues which are processed asynchronously. This prevents HTTP response timeouts on long-running functions and frees up the referring service/thread to continue processing. For some cleanup tasks, though, we cannot avoid using a timer trigger. This becomes a problem when there are duplicate functions in separate regions, as both will run. While it wouldn’t automatically have a negative effect, this would perform unnecessary duplicate corrective actions.

To mitigate this issue, we leave the function apps with scheduled jobs that are not currently the primary location in the disabled state. This means that those locations will not automatically failover in the event of an outage as they will need to be turned on – which can be scripted.

To copy the application settings and function keys, we must access two separate APIs for each individual function app:

- Azure Management API

- Kudu API

Update Application Settings With Azure Management API

Click Here to follow along with code below

The Azure Management API stores the application settings and connection strings. These can be retrieved with the Invoke-AzureRmResourceAction cmdlet and set with the New-AzureRmResource cmdlet.

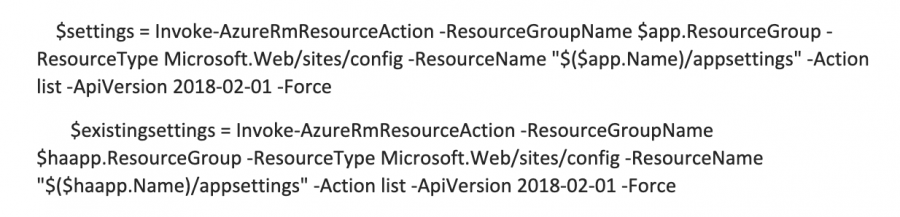

So, if we get both the settings from the primary app and the settings already in the HA app:

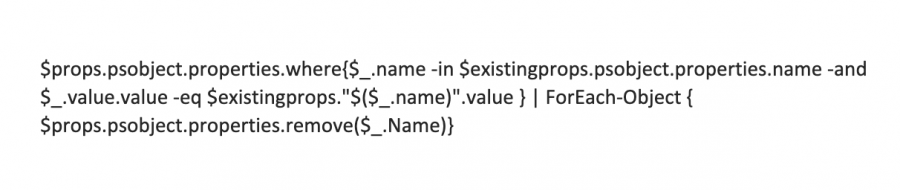

We can then compare both sets for differences in both names and values:

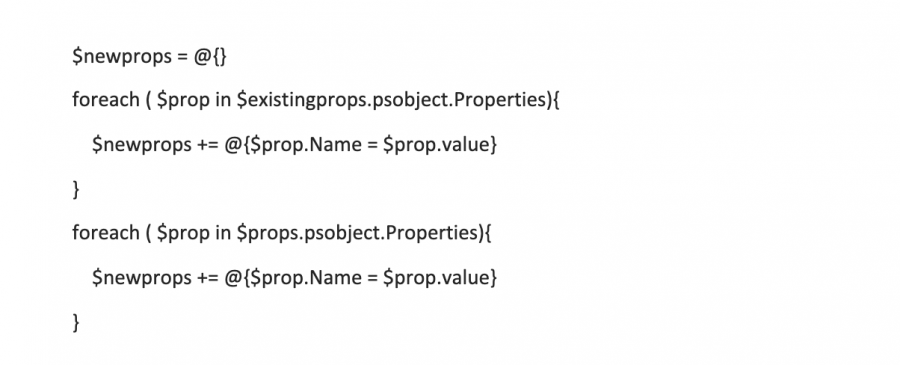

The New-AzureRmResource cmdlet expects a hash table so we reformat the properties:

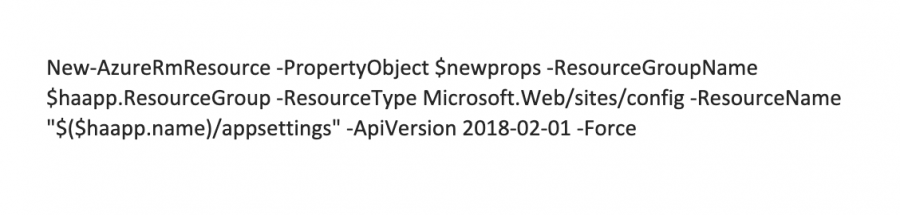

Then, we can post back to the API with only the changed values. Note that we did not want automated removal of properties. This will only add and modify existing properties:

That’s all it takes to update the application settings. Connection strings can be copied in the same fashion.

Retrieve Function Keys Using Kudu API

To retrieve Function Keys, we must connect to the Kudu API, which requires the application to be running. This means that for the time period that these are copying, the site must be on if it is one of the timer applications that we leave off in the HA region.

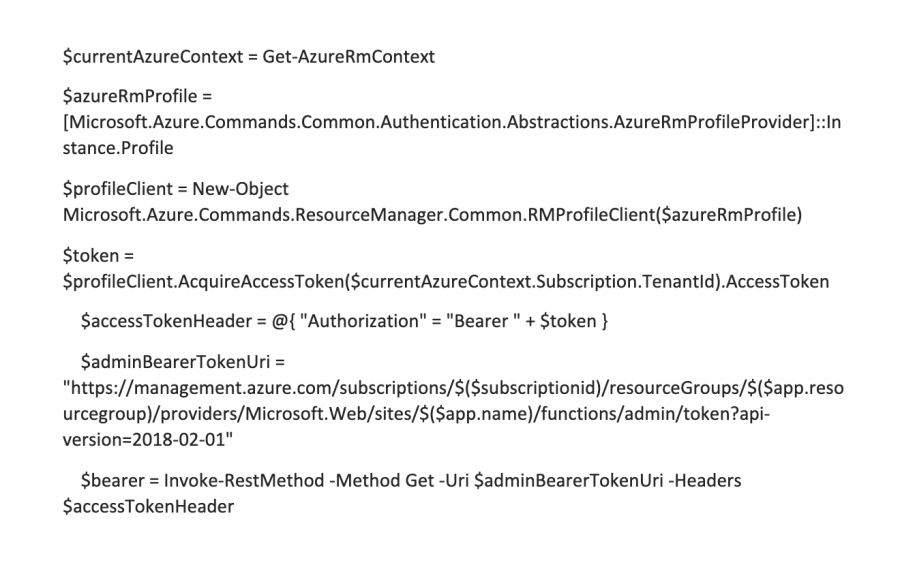

To connect to the Kudu API, we must have a bearer token specific to that application. We can retrieve that from the Azure Management API:

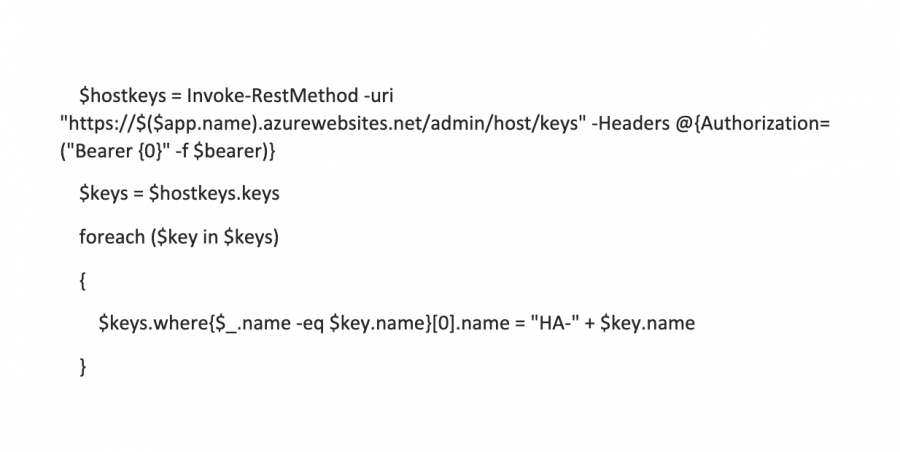

There are both host keys and function keys, so we get the host keys first. To avoid overwriting existing HA keys, prepend “HA-” before each key name:

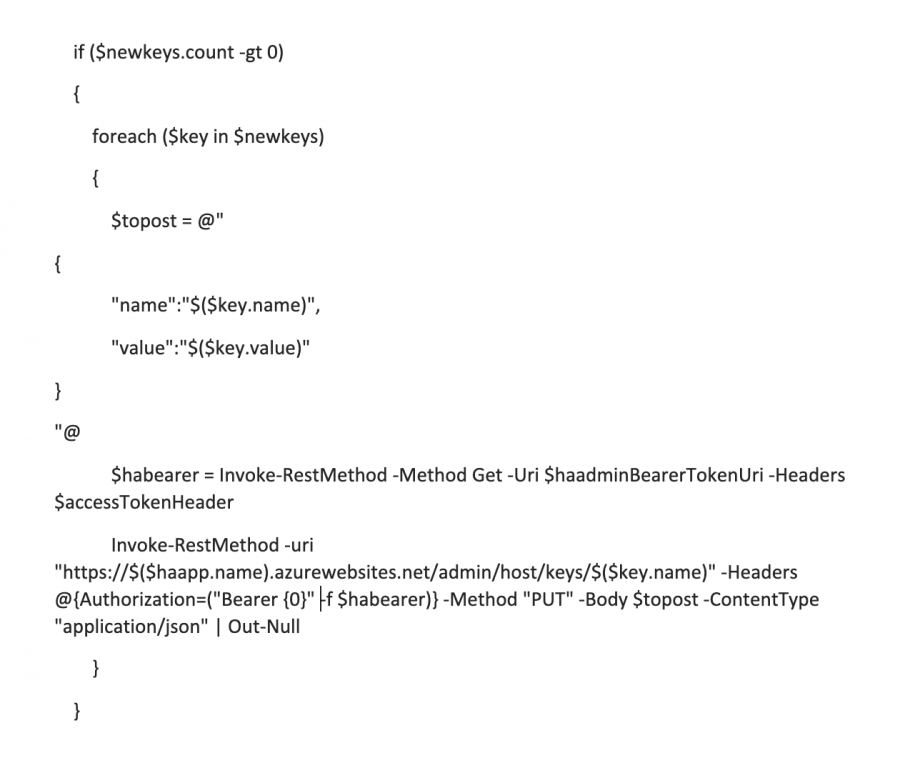

Do the same operation as before to compare the primary and HA key sets, then post back to Kudu. Since this is an http rest call, it is expecting a JSON payload, not a hash table:

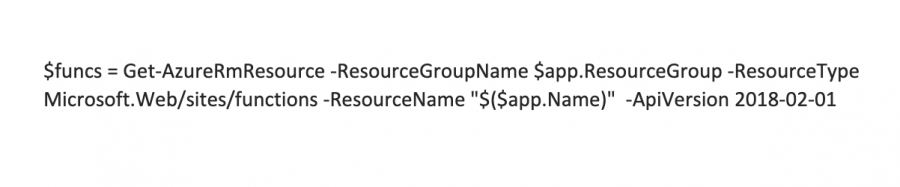

Now that our host keys are updated, we need a list of functions to update as well. This can be retrieved from the Azure Management API:

Then we can loop through the functions and update their keys the same way as the host keys but with a different uri:

https://$($app.name).azurewebsites.net/admin/functions/$($name)/keys

Click Here for all code shown above

Simplify and Scale with Azure Functions

This covers the replication for application settings and function keys. We do keep a few tables of data within Azure that these functions use. These started out in an Azure Table implementation, but to give us more control over the failover we have moved them to Azure Tables within Cosmos DB. This lets us perform manual failovers if we detect an issue prior to an Azure failure declaration.

These scheduled copies of application settings and function keys in addition to Cosmos replication allow us to have a manual or automated failover that would complete within minutes with minimal human intervention. By using Azure Functions in this way, we can simplify and scale interactions with our customers while ensuring zero downtime for their environments.

ivision Global Service Center

Our Global Service Center works 24/7/365 to resolve potential issues before they impact your business. ivision’s highly skilled engineers proactively monitor your environment so that you can run efficiently with zero downtime. Sleep better knowing that our team is working around the clock for your success.